It has been almost two years since the SARS-CoV-2 outbreak began in December 2019. Initially, it put tremendous pressure on every country to find ways of identifying the infection. The most prominent detection methods were laboratory tests like RT-PCR, which were time-consuming and labor-intensive. Faster and more automated methods, such as AI and deep learning models based on X-ray, CT and thermal imaging, were being explored, but they were still at a nascent stage and not ready for mass adoption and deployment. The testing infrastructure could not keep up with the infections, and this created a market for a variety of screening devices: temperature guns, pulse oximeters and similar devices that measure a few vital parameters affected by the coronavirus.

Problems

Unfortunately, these devices were riddled with problems:

- They had an extremely short range, which led to many manual measurement errors: users naturally tried to keep their distance from the subject's forehead to avoid any possibility of contracting the virus.

- The accuracy of spot IR devices has always been a concern because of the many factors affecting them, including but not limited to relative humidity, ambient temperature and emissivity.

- Cheap, low-quality sensors that were indistinguishable from good ones were introduced to increase profit margins per device, which made these devices notorious and suspect in the eyes of many users and researchers.

Solution - Theory

Design rules: I identified this problem in June 2020 and started developing a device with the following design goals in mind:

- It should have a range of at least 50 cm.

- It should be capable of measuring more than just one or two vital parameters so that the screening is much more robust to external factors.

- It should be affordable.

- It should be able to record all the data it measures with timestamps in a usable format (like .csv).

- It should have applicability beyond the pandemic.

The biggest challenges were the range and the measurement of multiple parameters. Core body temperature was measured using IR technology, which had a range of only a few centimetres. Pulse oximeters require contact to measure SPO2 and HR. At first glance the two technologies seem inherently different, but both rely on infrared light, although in different bands: thermal (long-wave) IR for temperature and red/near-IR light for pulse oximetry.

Remote-PhotoPlethysmography (r-PPG): I searched online and came across two ways to address these problems. One of them, called "remote-PhotoPlethysmography" or r-PPG, caught my attention. Pulse oximeters use photoplethysmography (PPG), which measures how much light from a source is absorbed by the finger's biological components. Since light is absorbed more strongly by blood than by the surrounding tissues, PPG sensors can detect changes in blood flow as changes in light intensity. The resulting voltage signal, called the PPG signal, is proportional to the quantity of blood flowing through the blood vessels. The absorption is also affected by the SPO2 level of the blood. Hence pulse oximeters can measure SPO2 and HR from the PPG signal. However, for this to work, the IR emitter has to be kept very close to the finger, which is why pulse oximeters are contact-based.

r-PPG is a novel take on this concept. The idea is that because sunlight contains all wavelengths, including IR, ambient light can act as the source needed for measuring absorption. A camera (generally a visible-light one) measures the fluctuations in the form of skin colour variations. Yes, you read that right: measuring SPO2 from your forehead or face. This could be the solution I was looking for!

HRV and HR calculations: PPG waveforms can also provide HRV, or heart rate variability. This is another vital parameter that gives additional information about your heartbeats. Basically, it tells you how much each heartbeat interval differs from the average. Normally, it is assumed that all heartbeats are equally spaced, which is why you get an almost instantaneous result on the pulse oximeter. As in ECG, PPG waveforms have peaks that are used to calculate the RR interval.

Traditional HR calculation

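HR = 60 / (RR interval), with HR in bpm and the RR interval in seconds

However, this assumption does not hold. Each RR interval is different, and large variations might indicate conditions like atrial fibrillation or other arrhythmias. In that case,

HRV = 60/HR - (RR interval), where HR is the average heart rate, so 60/HR is the average RR interval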

For the sake of simplicity, we still calculate the HR as mentioned above, but now we also calculate how much the current beat differs from that average. If the HRV fluctuates a lot at rest, it might be an indication of some disorder. If instead it fluctuates during exercise, it is an indication that your body is adept and responsive. Athletes tend to have high HRV.
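The calculation described above can be sketched in a few lines of Python. This is only an illustration: the RR intervals are made-up values, the function names are hypothetical, and the per-beat deviation is taken as the average RR interval (60/HR) minus the current RR interval, which is our reading of the description above.

```python
def heart_rate_bpm(rr_intervals_s):
    """Average heart rate in beats per minute from RR intervals in seconds."""
    mean_rr = sum(rr_intervals_s) / len(rr_intervals_s)
    return 60.0 / mean_rr


def beat_deviations_s(rr_intervals_s):
    """Per-beat deviation: average RR interval minus each RR interval (seconds)."""
    mean_rr = sum(rr_intervals_s) / len(rr_intervals_s)
    return [mean_rr - rr for rr in rr_intervals_s]


# Hypothetical RR intervals (seconds between successive PPG peaks).
rr = [0.80, 0.82, 0.78, 0.80]
hr = heart_rate_bpm(rr)            # average RR is 0.80 s, so about 75 bpm
deviations = beat_deviations_s(rr)  # roughly [0.0, -0.02, 0.02, 0.0]
```

A beat that arrives later than average gives a negative deviation here, and an early beat gives a positive one. Large swings in these values at rest are what the text above flags as worth a closer look.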

Calculating SPO2, HR, HRV from r-PPG signals: As mentioned here, HR and HRV are calculated by separating the colour channels of the facial skin region and averaging each of them. There are very few papers that show the calculations for SPO2 measurement using r-PPG; this is one of the papers that we referred to: Non-Contact Physiological Parameters Extraction Using Facial Video Considering Illumination, Motion, Movement and Vibration.

rPPG Algorithm (courtesy: https://www.noldus.com/blog/what-is-rppg)

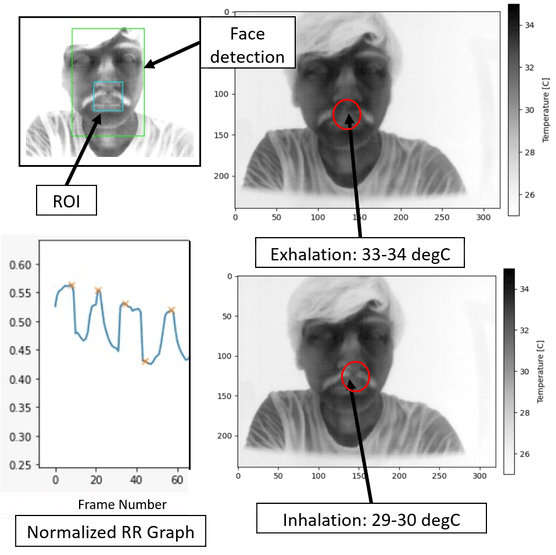

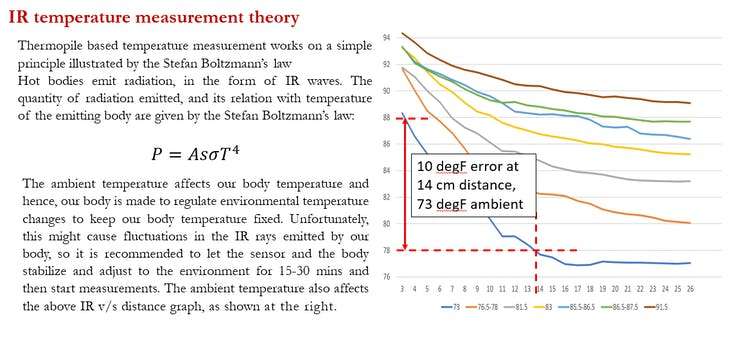
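To make the idea concrete, here is a minimal Python sketch of estimating HR from a sequence of per-frame average colour values. It is a simplified stand-in, not our actual pipeline: it assumes the face region has already been detected and its green channel averaged per frame, the frame rate of 25 fps is an assumption, and the input is a synthetic signal rather than real camera data. The function name `estimate_hr_bpm` is hypothetical.

```python
import math


def estimate_hr_bpm(green_means, fps, lo_bpm=42, hi_bpm=180):
    """Estimate heart rate from per-frame mean green values of a face region.

    Scans candidate rates in 1 bpm steps and returns the one whose sinusoid
    correlates most strongly with the mean-removed signal (a direct DFT probe).
    """
    n = len(green_means)
    mean = sum(green_means) / n
    x = [v - mean for v in green_means]  # remove the constant skin-tone level
    best_bpm, best_power = None, -1.0
    for bpm in range(lo_bpm, hi_bpm + 1):
        w = 2.0 * math.pi * (bpm / 60.0) / fps  # radians per frame
        re = sum(v * math.cos(w * i) for i, v in enumerate(x))
        im = sum(v * math.sin(w * i) for i, v in enumerate(x))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm


# Synthetic stand-in for camera data: 250 frames at an assumed 25 fps, with a
# small 1.2 Hz (72 bpm) pulse riding on a constant skin brightness.
FPS = 25.0
signal = [120.0 + 0.5 * math.sin(2.0 * math.pi * 1.2 * i / FPS) for i in range(250)]
estimated = estimate_hr_bpm(signal, FPS)
```

Restricting the search to a plausible heart-rate band keeps slow lighting drift and fast camera noise from being mistaken for a pulse. Real footage would additionally need motion and illumination handling, which this sketch leaves out.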

Measuring Respiratory Rate, Respiratory Rate Variability and Core Body Temperature from 50 cm: We know that IR technology is extremely dependent on the distance between the IR sensor or thermal camera and the subject, that is, the object emitting the IR rays. We researched and developed a few algorithms that could compensate for the drop in measured temperature due to distance and ambient temperature. We have published our findings on IEEE Xplore here: Enhanced Pyrometric device with Long Range for mass screening based on MLX90614. This paper received the best paper award at the international conference in India where we presented it.

RRV is conceptually analogous to HRV. So now we have ways to estimate most of the important parameters using long-range methods. Everything looks fine on paper, so let's move on to the practical implementation.

Solution - Prototyping

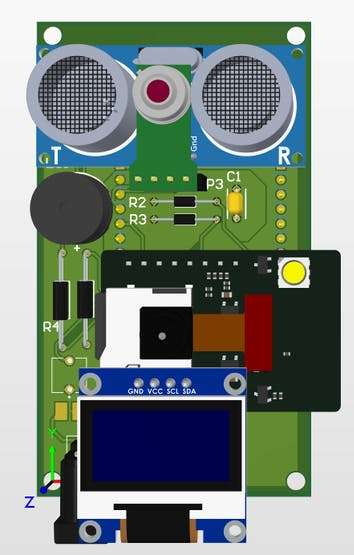

We found a lot of papers and preliminary ideas on the previously discussed concepts, but we were not able to find any code or practical implementations of them. We also realized that doing everything together would require an array of NIR, LWIR and visible cameras, which would make our system quite costly. Hence we decided to measure 3 parameters first: HR (heart rate), SPO2 (oxygen saturation) and CBT (core body temperature). HR and SPO2 could be measured using r-PPG and a visible-light camera, while CBT could be measured with a pyrometric sensor, which is much cheaper and more readily available than a thermal camera.

BOM:

We used the following main components:

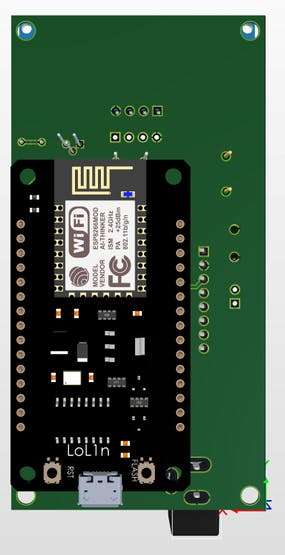

- NodeMCU Lolin: To run our regression model built on MATLAB that compensates the measured object temperature for variations due to ambient temperature and distance.

- ESP32 Cam: To take video in VGA format and transmit it wirelessly over WiFi to a central server or Desktop.

- Ultrasonic Sensor SR04: To measure the distance between the pyrometric sensor and the subject

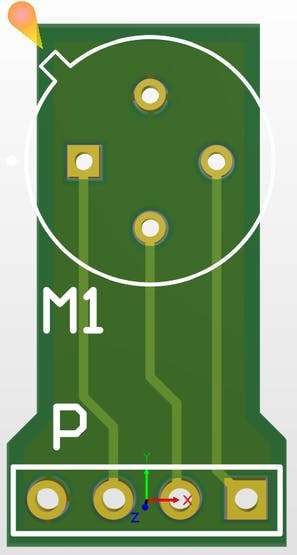

- Pyrometric sensor MLX90614: To measure the object and ambient temperature using IR technology

- 0.96" OLED Display: For displaying warnings, temperature values and other indications

- Piezo Buzzer: For Audio Alerts

- Wall Adapter or Battery: We calculated that the power requirement for our system was around 5V 1A or 5W. Hence we provided a barrel jack on the PCB so that the device could be powered using any option as needed. More on this in the Power Budget Section.

Our BOM cost came to about 2581 INR, or around $35 per piece, for the battery variant, including individual component costs, taxes and manufacturing. The CSV for the BOM is included in the attachments; the picture below shows the gist of it (quantity assumed: 100 pcs). If the battery is replaced by a 5V 2A barrel-jack wall adapter, the price drops further to about $32, as shown below.

BOM for 100 pcs (battery variant): BOM cost around 258101/100 = 2581 INR, or $35

This was a great price point for a product that could measure 3 vital parameters. Certified temperature guns and pulse oximeters easily cost around $50-100 each. Hence we moved on to the design phase.

Power Budget:

The main power is consumed by the ESP32 Cam. It consumes around 180 mA if the Flash LED is off. All these values are taken from the datasheets or reference documentation of the corresponding component.

- ESP32 Cam: 180 mA

- Node MCU: 40 mA

- SR04: 15 mA

- MLX90614: 1.5 mA

- Buzzer: 10-15mA (intermittent, not continuous)

- OLED and the remaining components: A few mA

Hence, the total current consumed is around 250 mA, so a 1000 mAh battery should last for around 3-4 hours of continuous usage.
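The arithmetic behind this estimate can be checked with a short Python sketch. The component currents are the ones listed above; the 5 mA figure for the OLED and remaining parts and the 80% usable-capacity derating are assumptions made here for illustration only.

```python
# Current draw per component in mA, as listed above (buzzer at its upper bound).
LOADS_MA = {
    "ESP32 Cam (flash LED off)": 180.0,
    "Node MCU": 40.0,
    "SR04": 15.0,
    "MLX90614": 1.5,
    "Buzzer (intermittent)": 15.0,
    "OLED and others (assumed value)": 5.0,
}


def runtime_hours(capacity_mah, load_ma, usable_fraction=1.0):
    """Battery runtime in hours for a constant load."""
    return capacity_mah * usable_fraction / load_ma


total_ma = sum(LOADS_MA.values())                                  # 256.5 mA
ideal_h = runtime_hours(1000.0, total_ma)                          # about 3.9 h
derated_h = runtime_hours(1000.0, total_ma, usable_fraction=0.8)   # about 3.1 h
```

With the full rated capacity the runtime is close to 4 hours, and with the assumed derating it is a little over 3 hours, which matches the 3-4 hour range quoted above.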

Design:

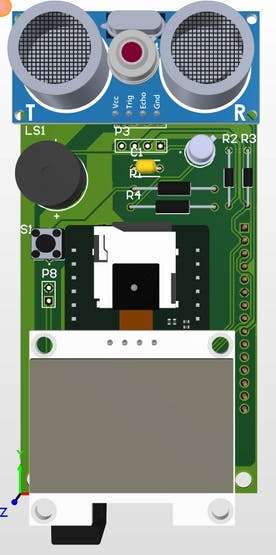

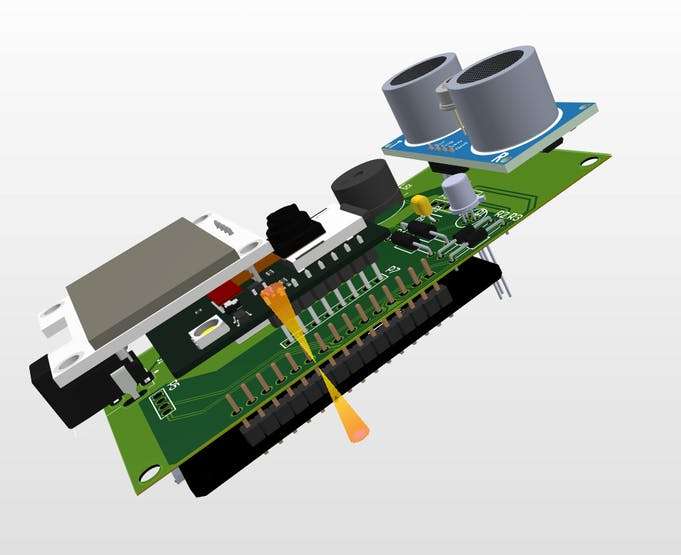

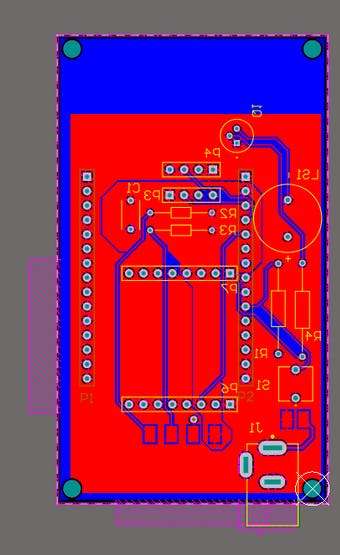

I developed my design on Altium Designer (AD20). The schematic, PCB Layout and 3D design are attached below:

The main connections are as follows:

- Node MCU D1 - SCL (Display and MLX)

- Node MCU D2 - SDA (Display and MLX)

- Node MCU D3 - ECHO (SR04 sensor)

- Node MCU D4 - GPIO12 of ESP32 Cam (wake_up GPIO)

- Node MCU D5 - Base of transistor that triggers the buzzer

- Node MCU D6 - TRIGGER (SR04 sensor)

- Node MCU D7 - Wifi AP Button (use it to change saved wifi credentials)

While the schematic is made for a supply voltage of 5V, the entire circuitry can also run on 3V with minor modifications.

Schematic

The first Layout and design iteration:


Simple tests on the assembled PCB:

This was the first design I developed. After assembly it looked pretty good:

Later, I made some revisions to the PCB and the case:

- First I developed a special PCB for the MLX sensors I was using, which helped me save a lot of costs because the modules were significantly more costly.

- Second, I noticed that I was using only one row of the Node MCU pins. Hence, using some quick tricks, I was able to design the board in such a way that, despite the overlap, I could easily solder both microcontrollers.

- I also adjusted the location of the ESP32 cam so that its camera could be in the centre.

This was a better design. I now proceeded to design the casing.

The case for the above design was developed on SolidWorks. The STEP file has been attached in the attachments. A few renders from keyshot are shown below:

The manufactured PCBs and metal enclosures were ordered from local vendors:

Coding

The first problem was dividing the work. There was a whole lot of work to do and even if I used both the microcontrollers together I would not be able to run my r-PPG and other algorithms while collecting the sensor data and streaming the video feed. If I used an RPi, the cost and power requirements would significantly increase. So, I decided to divide the entire system into 3 parts:

- Sensor data collection and displaying: The Node MCU will collect data from the sensors, apply the lightweight regression model to compensate the errors in the object temperature using the distance and ambient temperature values from the sensors as input. It will also relay all this data using WiFi.

- Video Streaming: ESP32 Cam will use both its cores for collecting the video frames and simultaneously streaming them in MJPEG format using WiFi.

- Vital Parameter and screening GUI: This device will contain our python-based software that would take the data and video stream from the Node MCU and ESP32 Cam over Wifi and use OpenCV and other python libraries to process that data and display the vital parameters that we need. It will also store and record the data in CSV format and run a simple Face Recognition based attendance system.
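As an illustration of the CSV recording step, here is a minimal Python sketch using only the standard library. The column names and the `append_reading` helper are hypothetical and are not taken from our software; the example values are placeholders, not measurements.

```python
import csv
import io
from datetime import datetime, timezone

# Hypothetical column layout for one screening record.
FIELDS = ["timestamp", "hr_bpm", "spo2_percent", "temperature_c"]


def append_reading(csv_file, hr_bpm, spo2_percent, temperature_c, when=None):
    """Append one timestamped row; write the header first if the file is empty."""
    writer = csv.DictWriter(csv_file, fieldnames=FIELDS)
    if csv_file.tell() == 0:
        writer.writeheader()
    when = when or datetime.now(timezone.utc)
    writer.writerow({
        "timestamp": when.isoformat(),
        "hr_bpm": hr_bpm,
        "spo2_percent": spo2_percent,
        "temperature_c": temperature_c,
    })


# An in-memory buffer stands in for a real file here. For a file on disk,
# open it with open(path, "a", newline="") as the csv module recommends.
buffer = io.StringIO()
append_reading(buffer, 72, 97, 36.6, when=datetime(2021, 1, 1, 9, 30, tzinfo=timezone.utc))
append_reading(buffer, 75, 98, 36.7, when=datetime(2021, 1, 1, 9, 31, tzinfo=timezone.utc))
rows = list(csv.DictReader(io.StringIO(buffer.getvalue())))
```

Storing an ISO 8601 timestamp with each row keeps the log sortable and easy to load into a spreadsheet or analysis tool later.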

WiFi on NodeMCU using WifiManager

So we first coded the Node MCU device. To get the WiFi password from the user, we used the Wifi manager library (https://github.com/tzapu/WiFiManager) which was very easy to set up. Here is a short demo:

The nodeMCU first searches for any saved passwords/SSIDs. If it doesn't find it, it becomes an Access Point and using the Wifi manager library you can enter your wifi credentials which the device will remember the next time you turn on the device. To change this password, press the button attached to D7 GPIO of the Node MCU.

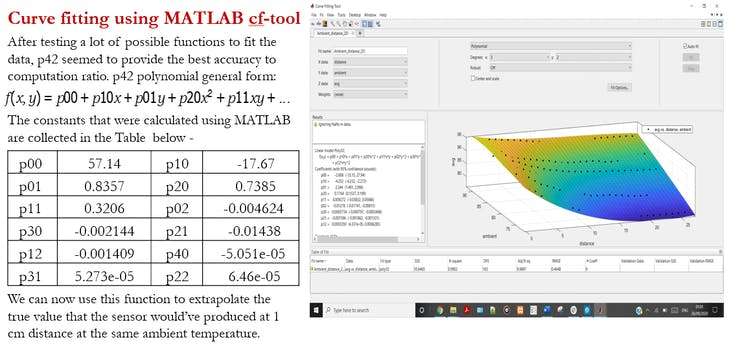

Error Compensation Algorithm on MATLAB

Next, we started developing the error compensation algorithm in MATLAB using its curve fitting tool. Our paper contains all the necessary details; please have a look at it for the detailed procedure. The images below show the gist of the results:

We implemented this directly in Arduino using the distance from the SR04 sensor and the ambient temperature measured by the MLX sensor itself. Using these inputs, we applied the error compensation to the object temperature and got an accuracy of 0.5 °C across ambient temperatures of 25 °C to 35 °C. The range we could get was a bit less (30 cm), which was a bummer. But it was still acceptable, as people generally used their fists to measure their body temperature and that gave us an extra 20 cm of range.
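For readers who want to experiment with this kind of compensation, here is a rough Python sketch of fitting a linear correction by least squares. Everything in it is an assumption for illustration: the linear model form, the `fake_sensor_reading` behaviour and the synthetic data are invented here and are not the model or coefficients from our paper.

```python
def solve_linear(a, b):
    """Solve a*x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_compensation(samples):
    """Least-squares fit of t_true ~ c0 + c1*t_obj + c2*t_amb + c3*dist_cm.

    Each sample is a tuple (t_obj, t_amb, dist_cm, t_true).
    """
    rows = [[1.0, t_obj, t_amb, d] for t_obj, t_amb, d, _ in samples]
    targets = [t_true for *_, t_true in samples]
    k = 4
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(k)]
    return solve_linear(ata, atb)  # normal equations: (A^T A) c = A^T t


def compensate(coeffs, t_obj, t_amb, dist_cm):
    """Apply the fitted correction to one raw object-temperature reading."""
    c0, c1, c2, c3 = coeffs
    return c0 + c1 * t_obj + c2 * t_amb + c3 * dist_cm


def fake_sensor_reading(t_true, t_amb, dist_cm):
    """Invented sensor behaviour: reads low with distance, pulled toward ambient."""
    return 0.9 * t_true + 0.1 * t_amb - 0.05 * dist_cm


# Synthetic calibration grid (not measured data).
samples = [
    (fake_sensor_reading(t, a, d), a, d, t)
    for t in (36.0, 37.0, 38.5)
    for a in (25.0, 30.0, 35.0)
    for d in (5.0, 15.0, 30.0)
]
coeffs = fit_compensation(samples)
raw = fake_sensor_reading(37.5, 28.0, 20.0)
corrected = compensate(coeffs, raw, 28.0, 20.0)
```

Once fitted offline, only the four coefficients and the one-line `compensate` formula need to run on the microcontroller, which is what makes this kind of model lightweight enough for a NodeMCU.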

Data sharing through WiFi using JSON and SPIFFS

We used JSON and SPIFFS to store the WiFi password on the NodeMCU and transmit the sensor data.
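On the receiving side, the JSON sensor data can be decoded with Python's standard `json` module. The sketch below is only an example: the payload and its field names (`object_temp_c`, `ambient_temp_c`, `distance_cm`) are hypothetical, and the real names used by the firmware may differ.

```python
import json

# Hypothetical field names; adjust to whatever the firmware actually sends.
REQUIRED_FIELDS = ("object_temp_c", "ambient_temp_c", "distance_cm")


def parse_reading(text):
    """Decode one JSON sensor message and check that all fields are present."""
    data = json.loads(text)
    missing = [key for key in REQUIRED_FIELDS if key not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return {key: float(data[key]) for key in REQUIRED_FIELDS}


# Example message with placeholder values.
payload = '{"object_temp_c": 36.4, "ambient_temp_c": 29.1, "distance_cm": 24.0}'
reading = parse_reading(payload)
```

Validating the fields up front means a truncated or malformed message fails loudly instead of silently producing a wrong temperature in the GUI.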

WiFi on ESP32 Cam:

We faced some issues while trying to use SPIFFS on the ESP32 Cam. So we went for a much simpler approach for taking and saving the WiFi credentials: HTML and EEPROM respectively. This saved a lot of code and space.

Web streaming:

We found that the stream from the ESP32 Cam web streaming example cannot be captured with OpenCV. Hence, we had to develop our own MJPEG-based video capture and streaming pipeline. We used RTOS for grabbing frames and a separate task for sending them to all connected clients. Up to 10 clients are supported, and we have tested the stream on 4 devices simultaneously. It worked well. We took reference from this wonderful repository and modified it to suit our requirements.
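For context, an MJPEG stream is just a sequence of JPEG images sent one after another, so a client can recover frames by scanning for the JPEG start and end markers. The Python sketch below shows that idea on an in-memory byte buffer. It is a simplified illustration, not our streaming code: the `extract_jpeg_frames` helper is hypothetical, the "frames" are dummy bytes, and it assumes frames do not contain embedded thumbnails.

```python
SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def extract_jpeg_frames(buffer):
    """Split a byte buffer into complete JPEG frames.

    Returns (frames, remainder). The remainder holds the bytes of an
    incomplete trailing frame and should be prepended to the next chunk.
    """
    frames = []
    while True:
        start = buffer.find(SOI)
        if start == -1:
            # Keep a lone trailing 0xFF in case the marker is split across chunks.
            return frames, (buffer[-1:] if buffer.endswith(b"\xff") else b"")
        end = buffer.find(EOI, start + 2)
        if end == -1:
            return frames, buffer[start:]
        frames.append(buffer[start:end + 2])
        buffer = buffer[end + 2:]


# Dummy data: two fake "frames" separated by multipart boundary text, with the
# second frame split across two network chunks.
frame_a = SOI + b"frame-a" + EOI
frame_b = SOI + b"frame-b" + EOI
chunk_1 = b"--boundary\r\n" + frame_a + b"\r\n--boundary\r\n" + frame_b[:5]
chunk_2 = frame_b[5:]

frames, rest = extract_jpeg_frames(chunk_1)
more_frames, rest_after = extract_jpeg_frames(rest + chunk_2)
```

Each recovered frame is a complete JPEG that an image library can decode. Carrying the remainder over between chunks is what lets frames that straddle two network reads be reassembled.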

GUI and Software development on Laptop/Desktop device

Now we had the camera stream and corrected temperature data. For the remaining implementation, we used python libraries and dependencies to develop our own signal processing pipeline from scratch. It looks like this:

The following features have been implemented:

- SPO2 (+/- 2%) and HR (+/- 10 bpm) measurement in software, triggered by an SPO2 button.

- Separate IP addresses for Camera feed and temperature data

- Each camera, WorkSafe device, etc. once configured, is saved permanently in the software, until deleted by the user. Hence, no need to enter or remember IP addresses again and again.

- Face Recognition.

- Attendance recording

- Video recording and saving

- Live Surveillance with camera feed rotation

- Auto mode: Automatically identifies if a person is standing in front of the device and takes measurements. No button pressing or human intervention is required.

- Any surveillance/USB/IP/Web camera can be used and saved with our software, apart from the WorkSafe device.

- Multiple camera feeds can be viewed at the same time.

- Temperature data from the WorkSafe device can be seen in the Software.

All details on how to install this software, use it, install its dependencies, etc. have been explained in the readme file of the software's GitHub repository, which is also provided in the attachments section. It also contains details on what each directory in the repository does or stores. There are two main run files, one opens the main GUI (WorkSafe.py) and the other opens a GUI to capture and store faces in the face recognition database of the main GUI software (batchPhoto.py). This feature is used for keeping a record of the vital stats of a particular person over time. It can also be used as a biometric system for workplaces.

The github repository has been included in the attachments section. Now that everything was set, it was time to test the entire system together!

WORKSAFE DEMO VIDEO

As you can see, the entire system performed pretty well in an actual, practical scenario. The inference speed could still be improved; it is slow because the algorithm collects a lot of frames (around 250) and analyses them together to identify skin colour variations across consecutive frames. I tested it on other people as well and got similar results: the SPO2 readings were within about 2%, while the HR was accurate to within 10-20 bpm depending on the person and their physical condition. Lighting also had a major effect on both parameters. Hence, it is recommended to keep the subject's face well lit. There should not be any light source directly in the camera's field of view, otherwise the results are erroneous.

We have received a very good result on our first test batch of almost 20 people, including 8 corona patients, with SPO2 levels ranging from as low as 86% to as high as 98%. Almost all our predictions are within +/- 2% of SPO2 percentage of the subject when measured by a contact-based pulse oximeter. The results are shown in the graphs below:

Here is a demo on a patient with very low SPO2 levels (86%). We tested it on a barebone version of our full software, which we are currently developing to run even on low-end single-board computers like the RPi, Ultra96-V2 or Jetson Nano, to create a variant that can do the work of the Node MCU, ESP32 Cam and the laptop on its own.

SPO2 measurement on a hypoxia patient using a barebone version of our algorithm

Implementation and future goals

We have planned to develop this technology further. There are still a lot of limitations we wish to improve:

- The PCB layout can be improved further to include a bigger battery.

- A one-device variant that uses a single board computer to perform all tasks is being developed. This variant will use a low-cost USB camera like the Lepton 3.5 and a NIR camera to measure the remaining parameters like RR and RRV. This device will also have a greater range and will be more robust to lighting conditions as the r-PPG measurement will be done using NIR or Night Vision camera.

- We are currently in the process of getting permission to test our algorithm on a larger batch of patients. Once verified, we will apply for CE, ISO and FDA certifications.

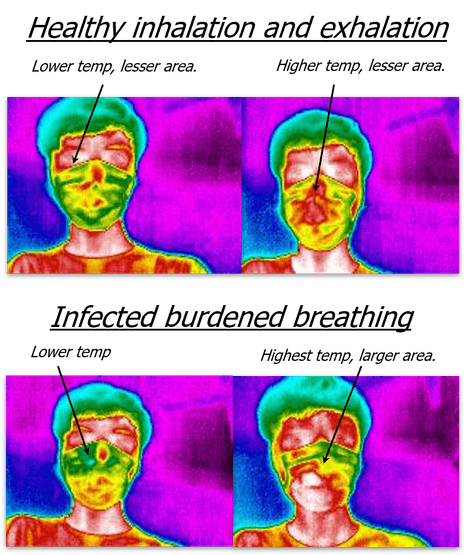

- We are also researching other possibilities, like detecting symptoms from fluctuations in a combination of these parameters, or using thermal images of particular body parts to diagnose a few non-critical but common diseases like diabetes (which is known to change the temperature map of an affected person's tongue).

- Currently, this tech and product are still at a nascent stage. Despite the small validation phase we conducted, the results are not fully conclusive. Hence, do not use this to treat any patient.

- That being said, we have already started conducting pilot tests in a few non-critical areas like residences and workplaces. We have received good feedback and we strive to improve our device even further to the point where it is market-ready!

Please like my project.