Story

I have always wanted to build a gesture-controlled robot that I can steer just by changing the orientation of my hand.

I used an M5Stack ESP32 board with a built-in IMU sensor to detect the gestures. The gestures are detected using the accelerometer: the X- and Y-axis acceleration readings are sent to the robot's onboard ESP32 using the ESP-NOW protocol.

Components Used

M5Stack Core2 for AWS IoT EduKit

The Core2 for AWS is built around an ESP32-D0WDQ6-V3 microcontroller. The main unit has a 2.0-inch capacitive touch screen for user interaction and an internal MPU6886, a 6-axis IMU (3-axis accelerometer plus 3-axis gyroscope).

I used the IMU and the screen for this project. The IMU's accelerometer gives the direction in which the M5Stack device is tilted.
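One way to turn the accelerometer readings into a tilt direction is sketched below. This is an illustration, not the code used in this project: the `Command` enum, the `classifyTilt` function, the 0.3 g dead zone and the axis sign convention (forward tilt gives positive Y, right tilt gives positive X) are all assumptions. On the Core2, `ax` and `ay` would come from the MPU6886 accelerometer readings, in g.

```cpp
#include <cmath>

// Hypothetical drive commands; the project does not publish its own encoding.
enum class Command { Stop, Forward, Backward, Left, Right };

// Assumed dead zone in g: small tilts are ignored so the robot stops
// while the hand is held roughly level.
constexpr float kDeadZone = 0.3f;

// Classify a tilt from accelerometer X/Y readings (in g).
// Assumption: tilting forward gives positive Y, tilting right gives positive X.
// The axis with the larger magnitude wins, so a diagonal tilt maps to one command.
Command classifyTilt(float ax, float ay) {
    if (std::fabs(ax) < kDeadZone && std::fabs(ay) < kDeadZone) {
        return Command::Stop;
    }
    if (std::fabs(ay) >= std::fabs(ax)) {
        return ay > 0.0f ? Command::Forward : Command::Backward;
    }
    return ax > 0.0f ? Command::Right : Command::Left;
}
```

For example, a reading of `ax = 0.1, ay = 0.6` classifies as `Forward`, while `ax = 0.05, ay = 0.02` falls inside the dead zone and gives `Stop`. The dead zone matters in practice: without it, sensor noise around level would make the robot twitch between commands.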

ESP32

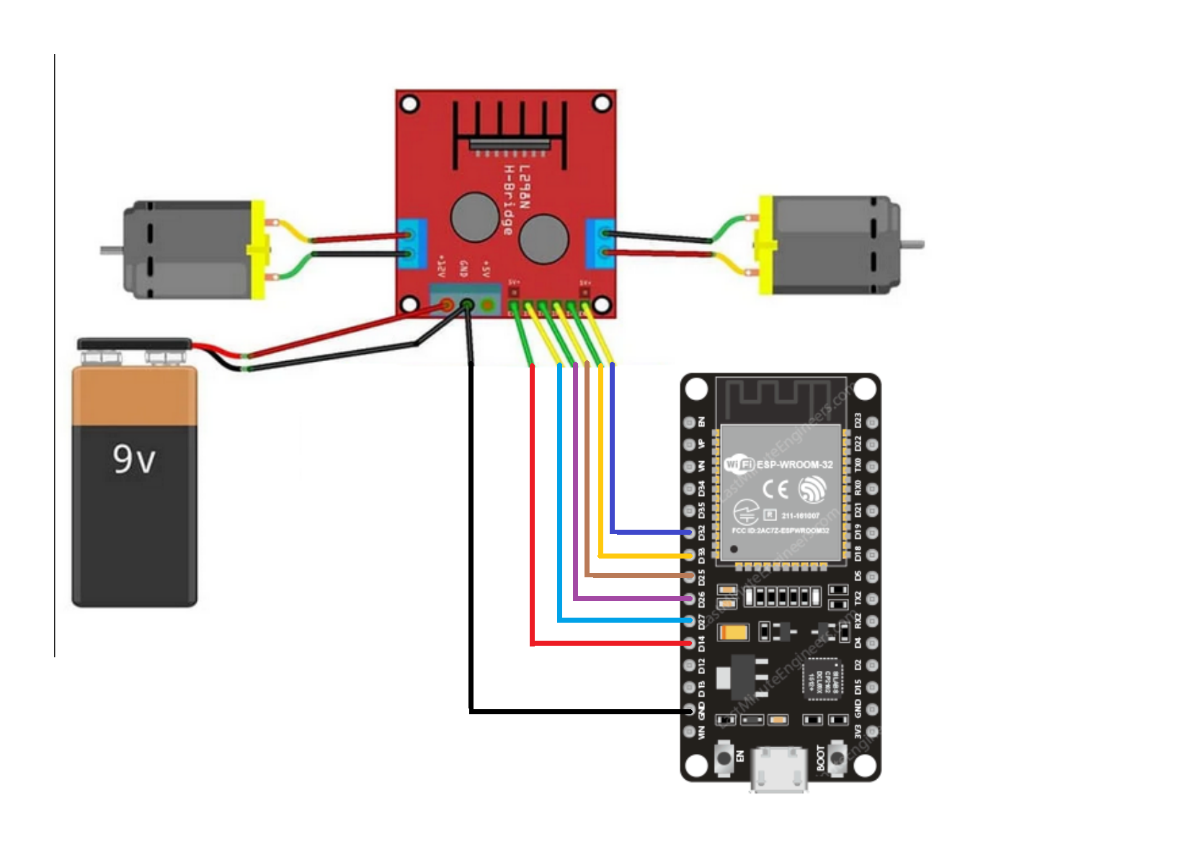

Circuit Connection

| ESP32 pin | L298N motor driver |
|-----------|--------------------|
| GPIO 14   | Motor 1 PWM        |
| GPIO 27   | Motor 1 IN1        |
| GPIO 26   | Motor 1 IN2        |
| GPIO 25   | Motor 2 IN3        |
| GPIO 33   | Motor 2 IN4        |
| GPIO 32   | Motor 2 PWM        |
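With this wiring, each motor's direction is set by its pair of L298N input pins and its speed by the PWM pin. The sketch below shows that mapping for a hypothetical set of drive commands. It assumes Motor 1 is the left wheel and that IN1/IN3 HIGH with IN2/IN4 LOW drives a wheel forward; flip the pairs if your motors are wired the other way. `driveFor` and `MotorOutputs` are illustrative names, not the project's code. On the robot, each field would be written to the matching GPIO with `digitalWrite` and the ESP32 core's PWM functions (whose exact signatures differ between Arduino-ESP32 core versions).

```cpp
#include <cstdint>

// GPIO numbers from the wiring table.
constexpr int kMotor1Pwm = 14;
constexpr int kMotor1In1 = 27;
constexpr int kMotor1In2 = 26;
constexpr int kMotor2In3 = 25;
constexpr int kMotor2In4 = 33;
constexpr int kMotor2Pwm = 32;

// Hypothetical drive commands.
enum class Command { Stop, Forward, Backward, Left, Right };

// Direction of a single motor.
enum class Spin { Off, Fwd, Rev };

// Levels to write to the L298N inputs plus PWM duty (0-255) for each motor.
struct MotorOutputs {
    bool in1, in2, in3, in4;
    std::uint8_t duty1, duty2;
};

// Map a command to pin levels. Per motor: Fwd = (HIGH, LOW),
// Rev = (LOW, HIGH), Off = (LOW, LOW) with zero duty.
MotorOutputs driveFor(Command cmd, std::uint8_t speed) {
    Spin m1 = Spin::Off;  // assumed left wheel
    Spin m2 = Spin::Off;  // assumed right wheel
    switch (cmd) {
        case Command::Forward:  m1 = Spin::Fwd; m2 = Spin::Fwd; break;
        case Command::Backward: m1 = Spin::Rev; m2 = Spin::Rev; break;
        case Command::Left:     m1 = Spin::Rev; m2 = Spin::Fwd; break;  // spin in place
        case Command::Right:    m1 = Spin::Fwd; m2 = Spin::Rev; break;  // spin in place
        case Command::Stop:     break;
    }
    MotorOutputs out{};  // all inputs LOW, duty 0
    out.in1 = (m1 == Spin::Fwd);
    out.in2 = (m1 == Spin::Rev);
    out.in3 = (m2 == Spin::Fwd);
    out.in4 = (m2 == Spin::Rev);
    out.duty1 = (m1 == Spin::Off) ? 0 : speed;
    out.duty2 = (m2 == Spin::Off) ? 0 : speed;
    return out;
}
```

Turning by running the wheels in opposite directions keeps the logic simple. A gentler alternative is to slow one wheel instead of reversing it, which only changes the duty values.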

ESP-NOW Protocol

I used the ESP-NOW protocol to send data from the EduKit to the robot's ESP32.


I used ESP-NOW's one-way communication mode to send the data. This configuration is easy to implement and well suited to sending data from one board to another, such as sensor readings or ON/OFF commands to control GPIOs.
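ESP-NOW carries raw bytes, so the sender and receiver must agree on a payload layout. A minimal sketch, assuming the payload is just the X and Y acceleration as two floats: the sender copies the struct into a buffer and passes it to `esp_now_send`, and the receiver copies the bytes back in the callback registered with `esp_now_register_recv_cb`. `TiltPacket`, `encodeTilt` and `decodeTilt` are hypothetical names; the project's actual message format is not shown.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical payload: X and Y acceleration (in g) measured on the Core2.
struct TiltPacket {
    float ax;
    float ay;
};

// Copy the packet into a byte buffer for sending.
// Both ends are ESP32s (same endianness and float layout), so a raw copy works.
// Returns the number of bytes written, or 0 if the buffer is too small.
std::size_t encodeTilt(const TiltPacket& pkt, std::uint8_t* buf, std::size_t cap) {
    if (cap < sizeof(TiltPacket)) return 0;
    std::memcpy(buf, &pkt, sizeof(TiltPacket));
    return sizeof(TiltPacket);
}

// Rebuild the packet on the receiver, rejecting payloads of the wrong length.
bool decodeTilt(const std::uint8_t* data, std::size_t len, TiltPacket& out) {
    if (len != sizeof(TiltPacket)) return false;
    std::memcpy(&out, data, sizeof(TiltPacket));
    return true;
}
```

Checking the length on the receiver is a cheap guard against stray packets from other ESP-NOW senders. The raw-copy approach relies on both boards sharing the same struct definition and compiler layout, which holds here because both run ESP32 firmware.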

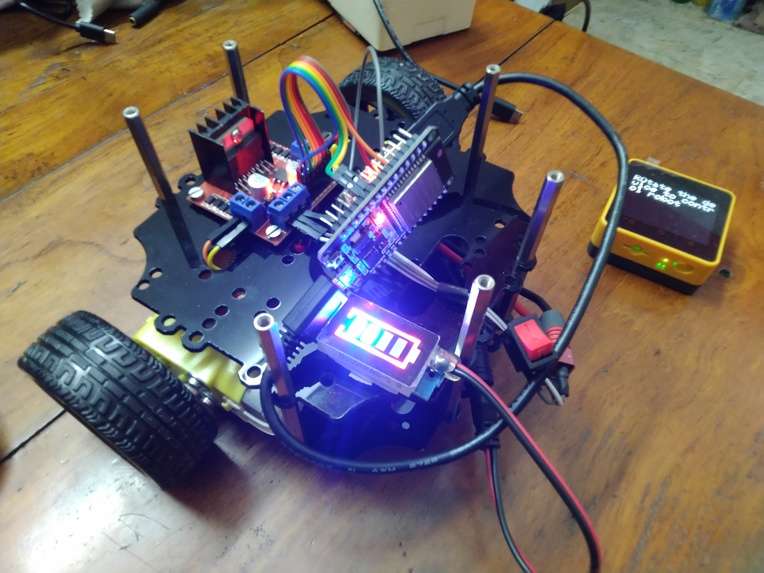

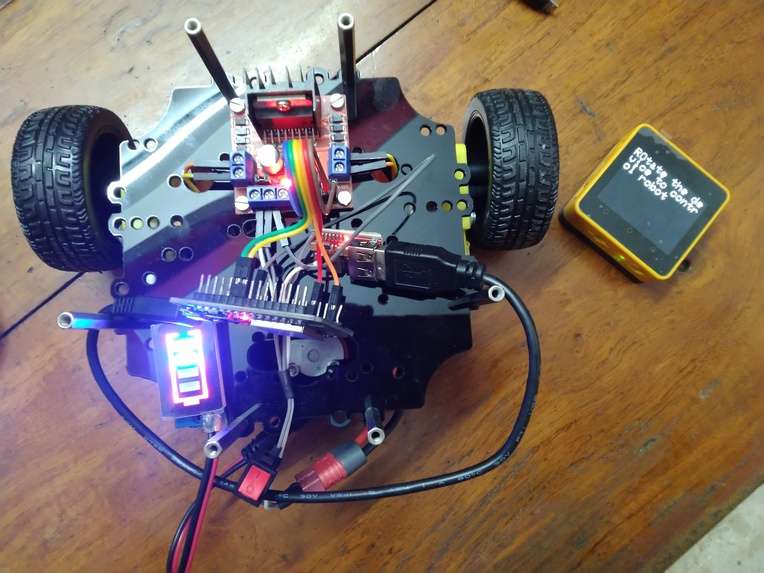

Result
