- First, interface the Pi Camera module with the Raspberry Pi. The following guide explains how to connect the camera to the Raspberry Pi: https://projects.raspberrypi.org/en/projects/getting-started-with-picamera/0

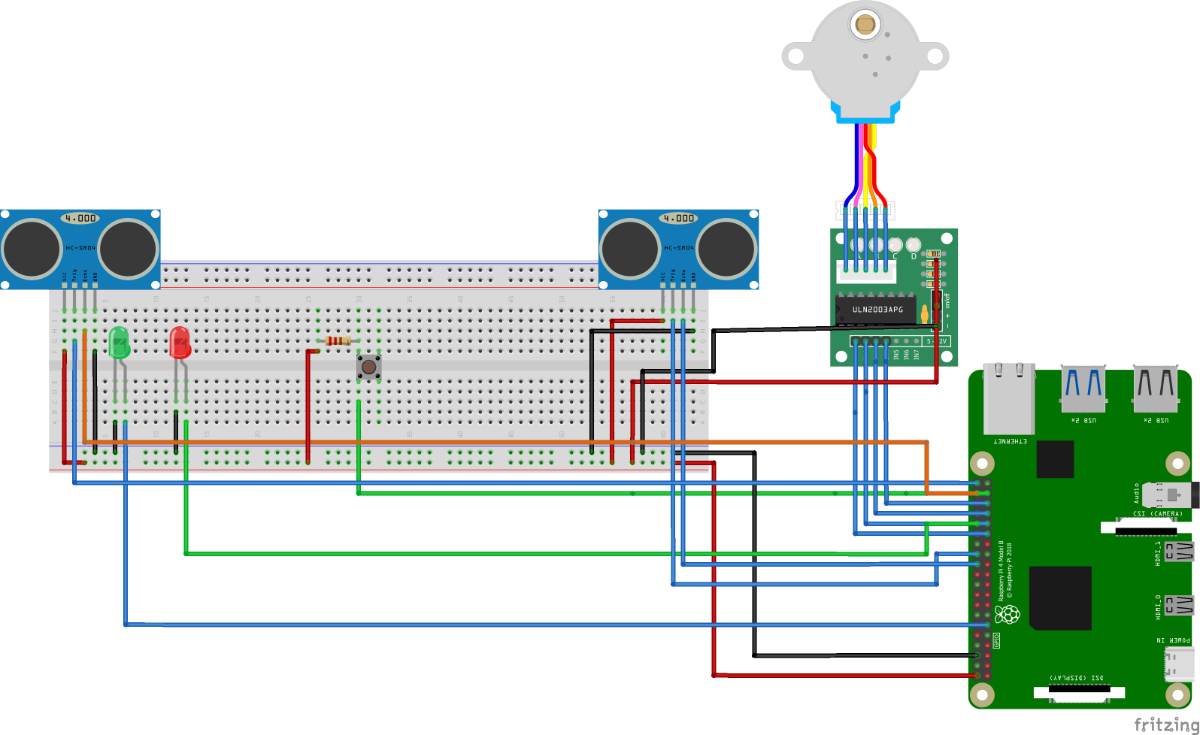

- Make the required connections as shown in the image.

- The Pi Camera module is not shown in the image above, but it must also be connected.

- To capture an image of an object, place the garbage in front of the camera and press the push button.

- The green LED turns on to indicate that an image is being captured.

- Once the image has been captured, the OpenCV library is used to preprocess it.

- After preprocessing, a pre-trained neural network (an SSD MobileNet v2 model trained on the COCO dataset) detects the object in the image.

- To do this, a blob with the specified dimensions is created from the image and passed through the network to obtain the prediction.

- The motor is then signalled to rotate in a direction that depends on the detected waste: clockwise if the garbage is biodegradable, anti-clockwise otherwise. (In the code below, only objects labelled `apple` or `banana` are treated as biodegradable.)

- Two ultrasonic sensors indicate whether the dustbin is full. If either of them measures a distance below 10 cm, a red LED glows.

- Now, let's begin the coding part.

- Import the required libraries for GPIO, the camera, timing, OpenCV, NumPy, Matplotlib, etc. If you get a "module not found" error, install the missing library with pip.
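Before running the program, it can help to check which of these modules are missing. The sketch below uses a hypothetical helper, `missing_packages`, which is not part of the original project. It checks only the top-level module names with `importlib.util.find_spec` and maps each one to the pip package name commonly used to install it. That mapping is an assumption.

```python
import importlib.util

# Import name -> pip package name (assumed mapping for the modules used in this project)
REQUIRED = {
    "RPi": "RPi.GPIO",
    "picamera": "picamera",
    "cv2": "opencv-python",
    "numpy": "numpy",
    "matplotlib": "matplotlib",
    "IPython": "ipython",
}


def missing_packages(required=REQUIRED):
    """Return the pip package names whose top-level module cannot be found."""
    return [pip_name for module, pip_name in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Install with: pip install " + " ".join(missing))
    else:
        print("All required modules are available.")
```

Checking only top-level names avoids importing anything. This matters because `find_spec` on a dotted name such as `RPi.GPIO` would first import the parent package.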

```python
import RPi.GPIO as gpio
from picamera import PiCamera
import time
from time import sleep
import sys
import os
import urllib.request  # "import urllib" alone does not guarantee that urllib.request is loaded
import cv2
import numpy as np
import matplotlib.pyplot as plt
from IPython import get_ipython

ipython_shell = get_ipython()  # None when the script is not run inside IPython/Jupyter
```

11. Define the pins and channels for the ultrasonic sensors, the motor driver, and the LEDs.

12. Set up the GPIO mode and initialize each pin and channel as an input or an output.

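```python
gpio.setwarnings(False)   # disable "channel already in use" warnings (called before setup)
gpio.setmode(gpio.BOARD)  # use physical (board) pin numbers

p = 37        # push-button input
out = 11      # green LED, lit while an image is being captured
p_btn = 37    # same pin as p
led = 11      # same pin as out; also lit while the motor turns
arduino = 32  # output for the red "bin full" LED (see the note below)

motor_channel = (29, 31, 33, 35)           # stepper-motor driver inputs
motor_channel_reversed = (35, 33, 31, 29)  # same pins in reverse order (anti-clockwise)
gpio_TRIGGER = (40, 26)  # trigger pins of the two ultrasonic sensors
gpio_ECHO = (38, 24)     # echo pins of the two ultrasonic sensors

gpio.setup(p, gpio.IN)
gpio.setup(out, gpio.OUT)
gpio.setup(motor_channel, gpio.OUT)
gpio.setup(arduino, gpio.OUT)
gpio.setup(gpio_TRIGGER, gpio.OUT)
gpio.setup(gpio_ECHO, gpio.IN)

camera = PiCamera()
```

13. Don't be confused by the variable named `arduino`: no Arduino is used in this project. This code was reused from one of our previous projects, adapted for this one, and the variable name was never changed.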
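Several names share a physical pin: `p` and `p_btn` are both pin 37, and `out` and `led` are both pin 11. Because of this, it can be worth checking the pin map for accidental collisions before wiring. The helper below, `shared_pins`, is a hypothetical sketch that uses plain Python data only, so it runs without a Raspberry Pi. The names `motor_1` and `trigger_1` simply label the tuple entries from the setup code.

```python
from collections import defaultdict

# BOARD pin numbers copied from the setup code above
PINS = {
    "p": 37, "out": 11, "p_btn": 37, "led": 11, "arduino": 32,
    "motor_1": 29, "motor_2": 31, "motor_3": 33, "motor_4": 35,
    "trigger_1": 40, "trigger_2": 26, "echo_1": 38, "echo_2": 24,
}


def shared_pins(pins):
    """Return {pin: sorted names} for every pin used by more than one name."""
    by_pin = defaultdict(list)
    for name, pin in pins.items():
        by_pin[pin].append(name)
    return {pin: sorted(names) for pin, names in by_pin.items() if len(names) > 1}


print(shared_pins(PINS))
```

With the pins above, only the two intentional aliases (37 and 11) should be reported. Any other entry would point to a wiring or typing mistake.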

14. Next, we define a function that measures the distance with each ultrasonic sensor:

- Set the trigger pin high for a short time (10 µs).

- Set the trigger pin low again.

- Measure how long the echo pin stays high, that is, the time from its rising edge to its falling edge.

- Calculate the distance with the following formula, dividing by 2 because the sound travels to the object and back:

distance = (time * speed of sound) / 2
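As a worked example of this formula, an echo pulse that stays high for 1 ms corresponds to 0.001 s × 34300 cm/s / 2 = 17.15 cm. The small function below, `echo_to_distance_cm`, is a hypothetical helper that performs this conversion without any hardware. It uses the same 34300 cm/s speed of sound as the code.

```python
SPEED_OF_SOUND_CM_S = 34300  # same value as in the distance() function


def echo_to_distance_cm(elapsed_s):
    """Convert the echo pulse duration (seconds) to a distance (cm).

    The pulse travels to the object and back, so the path is halved.
    """
    return elapsed_s * SPEED_OF_SOUND_CM_S / 2


print(round(echo_to_distance_cm(0.001), 2))  # 17.15
```

Working backwards, the 10 cm "bin full" threshold used in the main loop corresponds to an echo pulse of about 20 / 34300 s, roughly 583 µs.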

```python
def distance():
    """Return the distances (in cm) measured by the two ultrasonic sensors."""
    TimeElapsed = [0, 0]
    distances = [0, 0]
    for i in range(2):
        # Send a 10 µs (0.01 ms) HIGH pulse on the trigger pin
        gpio.output(gpio_TRIGGER[i], True)
        time.sleep(0.00001)
        gpio.output(gpio_TRIGGER[i], False)

        StartTime = time.time()
        StopTime = time.time()

        # Save the time at which the echo pulse starts (rising edge)
        while gpio.input(gpio_ECHO[i]) == 0:
            StartTime = time.time()

        # Save the time at which the echo pulse ends (falling edge)
        while gpio.input(gpio_ECHO[i]) == 1:
            StopTime = time.time()

        # Time difference between start and arrival
        TimeElapsed[i] = StopTime - StartTime

        # Multiply by the speed of sound (34300 cm/s) and divide by 2,
        # because the pulse travels to the object and back
        distances[i] = (TimeElapsed[i] * 34300) / 2

    return distances
```
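The two `while` loops in `distance()` wait forever if an echo is missed, for example when a sensor is disconnected. A common remedy is to give each wait a timeout. The sketch below shows this with hypothetical helpers, `wait_for_level` and `measure_pulse`, which are not part of the original code. They take any zero-argument function that returns the pin level, so the logic can be tried without hardware. On the Pi, that function could be `lambda: gpio.input(gpio_ECHO[i])`. The 50 ms default timeout is an assumed value, not taken from a sensor datasheet.

```python
import time


def wait_for_level(read_level, level, timeout_s=0.05):
    """Poll read_level() until it returns `level`.

    Returns the time.monotonic() timestamp at which the level was seen,
    or None if it was not seen within timeout_s seconds.
    """
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if read_level() == level:
            return time.monotonic()
    return None


def measure_pulse(read_level, timeout_s=0.05):
    """Return the length (s) of the next HIGH pulse, or None on timeout."""
    start = wait_for_level(read_level, 1, timeout_s)  # rising edge
    if start is None:
        return None
    stop = wait_for_level(read_level, 0, timeout_s)   # falling edge
    if stop is None:
        return None
    return stop - start
```

`time.monotonic()` is used instead of `time.time()` because it cannot jump backwards if the system clock is adjusted. A `None` result can then be skipped or retried instead of blocking the whole program.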

15. Next, we define a function that detects objects in an image using a pre-trained neural network:

- Create a blob with the specified dimensions from the image.

- Pass the blob to the network and get the predicted objects.

- Return the detections.
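`cv2.dnn.blobFromImage` returns a 4-D float32 array in N×C×H×W order (batch, channels, height, width). The arguments used here are a scale factor of 1.0, zero mean and `swapRB=True`. With those, the blob is essentially the resized image with its channels swapped from BGR to RGB and moved to the front. The NumPy-only sketch below illustrates that layout using a hypothetical helper, `hwc_bgr_to_nchw_rgb`. It assumes the image has already been resized to `dim`×`dim`. It is an illustration only, not a replacement for `blobFromImage`, which also performs the resizing.

```python
import numpy as np


def hwc_bgr_to_nchw_rgb(im):
    """Rearrange an H x W x 3 BGR image into a 1 x 3 x H x W float32 RGB blob."""
    rgb = im[:, :, ::-1]                        # swap BGR -> RGB (like swapRB=True)
    chw = np.transpose(rgb, (2, 0, 1))          # move channels first: 3 x H x W
    return chw[np.newaxis].astype(np.float32)   # add the batch axis: 1 x 3 x H x W


dim = 200
im = np.zeros((dim, dim, 3), dtype=np.uint8)
im[:, :, 0] = 255        # a pure blue image in OpenCV's BGR order
blob = hwc_bgr_to_nchw_rgb(im)
print(blob.shape)        # (1, 3, 200, 200)
print(blob[0, 2, 0, 0])  # 255.0 (blue is now the last channel, as in RGB)
```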

```python
def detect_objects(net, im):
    dim = 200  # width and height (pixels) of the blob fed to the network
    # Create a blob from the image (resize to dim x dim, BGR -> RGB, no mean subtraction)
    blob = cv2.dnn.blobFromImage(im, 1.0, size=(dim, dim), mean=(0, 0, 0), swapRB=True, crop=False)
    # Pass the blob to the network
    net.setInput(blob)
    # Perform the prediction
    objects = net.forward()
    return objects
```

16. Next, we define two functions that display the class name and bounding box of each detected object on the image:

- For each detected object, get the class id, confidence score, and coordinates.

- If the score is higher than a threshold, display the class name and bounding box on the image.

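- Convert the image to RGB and display it using Matplotlib.

- `display_text` also stores the label it draws in the global variable `string_obj`. The main loop uses this label (that of the last object drawn) to choose the motor direction.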
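For this SSD model, the network output has shape 1×1×N×7. Each of the N rows is `[image_id, class_id, confidence, x_min, y_min, x_max, y_max]`, with the box corners normalized to the range 0 to 1; the indices 1 to 6 used in `display_objects` follow this layout. The sketch below is a hypothetical alternative to the global `string_obj`. The helper `parse_detections` returns the detections above the threshold, sorted by confidence, so the caller can take the best label directly. The label list `demo_labels` and the detection array are made up for illustration.

```python
import numpy as np


def parse_detections(objects, labels, threshold, width, height):
    """Return [(label, score, (x, y, w, h)), ...] above threshold, best first."""
    results = []
    for det in objects[0, 0]:
        class_id, score = int(det[1]), float(det[2])
        if score <= threshold:
            continue
        x_min, y_min, x_max, y_max = (float(v) for v in det[3:7])
        x, y = int(x_min * width), int(y_min * height)
        w, h = int(x_max * width - x), int(y_max * height - y)
        results.append((labels[class_id], score, (x, y, w, h)))
    return sorted(results, key=lambda r: r[1], reverse=True)


# Hypothetical example: two detections on a 1280 x 720 image
demo_labels = ["background", "apple", "banana"]
objects = np.array([[[
    [0, 2, 0.65, 0.10, 0.10, 0.30, 0.40],
    [0, 1, 0.90, 0.50, 0.50, 0.75, 0.90],
]]], dtype=np.float32)
detections = parse_detections(objects, demo_labels, 0.59, 1280, 720)
best_label = detections[0][0] if detections else ""
print(best_label)  # apple
```

Returning the highest-scoring label makes the decision independent of the order in which the network lists its detections. It also gives a well-defined empty result when nothing passes the threshold.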

```python
def display_text(im, text, x, y):
    # FONTFACE, FONT_SCALE and THICKNESS are globals defined in the main program
    global string_obj
    # Get the text size
    textSize = cv2.getTextSize(text, FONTFACE, FONT_SCALE, THICKNESS)
    dim = textSize[0]
    baseline = textSize[1]
    # Use the text size to draw a filled black rectangle behind the label
    cv2.rectangle(im, (x, y - dim[1] - baseline), (x + dim[0], y + baseline), (0, 0, 0), cv2.FILLED)
    # Display the text inside the rectangle
    cv2.putText(im, text, (x, y - 5), FONTFACE, FONT_SCALE, (0, 255, 255), THICKNESS, cv2.LINE_AA)
    # Remember the label for the main loop
    string_obj = str(text)


def display_objects(im, objects, threshold):
    rows = im.shape[0]
    cols = im.shape[1]
    # For every detected object
    for i in range(objects.shape[2]):
        # Find the class and confidence
        classId = int(objects[0, 0, i, 1])
        score = float(objects[0, 0, i, 2])
        # Recover the original coordinates from the normalized coordinates
        x = int(objects[0, 0, i, 3] * cols)
        y = int(objects[0, 0, i, 4] * rows)
        w = int(objects[0, 0, i, 5] * cols - x)
        h = int(objects[0, 0, i, 6] * rows - y)
        # Keep only detections above the confidence threshold
        if score > threshold:
            display_text(im, "{}".format(labels[classId]), x, y)  # labels: global list from the main program
            cv2.rectangle(im, (x, y), (x + w, y + h), (255, 255, 255), 2)
    # Convert the image to RGB, since Matplotlib expects RGB and OpenCV uses BGR
    mp_img = cv2.cvtColor(im, cv2.COLOR_BGR2RGB)
    plt.figure(figsize=(10, 10))
    plt.imshow(mp_img)
    #plt.show()
    cv2.waitKey(0)
    cv2.destroyAllWindows()
```

17. Next, we define a function that rotates the stepper motor for a fixed number of steps, either clockwise or anti-clockwise:

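- For clockwise rotation ('c'), drive the four motor pins through an eight-pattern half-step sequence. For anti-clockwise rotation ('a'), apply the same sequence to the pins in reverse order.

- Repeat the sequence 1000 times, waiting 0.9 ms between patterns.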
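The eight output patterns in `motor_rotation` form a half-step sequence: the coils are energized alternately one at a time and in adjacent pairs. Writing the same sequence to the pins in reverse order walks the coils the other way round, which is why `motor_channel_reversed` produces anti-clockwise rotation. The sketch below uses hypothetical helpers with no GPIO access. `coil_pattern` generates the coil patterns. `rotation_time_s` estimates how long one call takes from the loop count and delay used in the code: 1000 × 8 × 0.9 ms ≈ 7.2 s, ignoring the time spent in `gpio.output` itself.

```python
# Half-step sequence for coils (A, B, C, D); 1 = energized. Same order as motor_rotation().
HALF_STEP = [
    (1, 0, 0, 0), (1, 1, 0, 0), (0, 1, 0, 0), (0, 1, 1, 0),
    (0, 0, 1, 0), (0, 0, 1, 1), (0, 0, 0, 1), (1, 0, 0, 1),
]


def coil_pattern(step, direction):
    """Coil states (A, B, C, D) for a given step index.

    direction 'c' writes the sequence to the pins in order; 'a' writes it
    to the pins in reverse order, as motor_channel_reversed does.
    """
    pattern = HALF_STEP[step % len(HALF_STEP)]
    return pattern if direction == 'c' else pattern[::-1]


def rotation_time_s(iterations=1000, steps_per_iteration=8, delay_s=0.0009):
    """Minimum duration of one motor_rotation() call (sleep time only)."""
    return iterations * steps_per_iteration * delay_s


print([coil_pattern(i, 'a') for i in range(3)])  # [(0, 0, 0, 1), (0, 0, 1, 1), (0, 0, 1, 0)]
print(round(rotation_time_s(), 2))               # 7.2
```

The anti-clockwise patterns (D, then C and D, then C, ...) are the clockwise sequence run backwards, starting from a different point in the cycle.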

```python
# Half-step sequence for the four motor-driver inputs (one or two coils energized at a time)
HALF_STEP_SEQUENCE = (
    (gpio.HIGH, gpio.LOW, gpio.LOW, gpio.LOW),
    (gpio.HIGH, gpio.HIGH, gpio.LOW, gpio.LOW),
    (gpio.LOW, gpio.HIGH, gpio.LOW, gpio.LOW),
    (gpio.LOW, gpio.HIGH, gpio.HIGH, gpio.LOW),
    (gpio.LOW, gpio.LOW, gpio.HIGH, gpio.LOW),
    (gpio.LOW, gpio.LOW, gpio.HIGH, gpio.HIGH),
    (gpio.LOW, gpio.LOW, gpio.LOW, gpio.HIGH),
    (gpio.HIGH, gpio.LOW, gpio.LOW, gpio.HIGH),
)


def motor_rotation(motor_direction):
    """Turn the motor: 'c' = clockwise, 'a' = anti-clockwise."""
    if motor_direction == 'c':
        channels = motor_channel
    elif motor_direction == 'a':
        # The same sequence on the pins in reverse order turns the motor the other way
        channels = motor_channel_reversed
    else:
        return
    gpio.output(led, True)  # LED stays on while the motor turns
    for i in range(1000):
        for pattern in HALF_STEP_SEQUENCE:
            gpio.output(channels, pattern)
            sleep(0.0009)
    gpio.output(led, False)
```

18. Finally, we write the main program loop, which will:

- Wait for the push button to be pressed, then capture an image from the camera.

- Detect the objects in the image and draw them on it.

- If a biodegradable object (an apple or a banana) is detected, rotate the motor clockwise; otherwise, rotate it anti-clockwise.

- Measure the distances with the two ultrasonic sensors.

- If either distance is below 10 cm, turn on the red LED to signal that the bin is full.

- Wait for a short time before repeating the loop.
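The decisions made in each pass of the loop can be separated from the hardware calls, which makes them easy to test. The function below, `decide`, is a hypothetical sketch. It applies the same rules as the main loop: only `apple` and `banana` count as biodegradable, and the bin is treated as full when either sensor reads less than 10 cm.

```python
BIODEGRADABLE = {"apple", "banana"}  # labels treated as biodegradable in the main loop
FULL_THRESHOLD_CM = 10               # distance below which a compartment counts as full


def decide(label, distances_cm):
    """Return (motor_direction, bin_full) for one detection cycle.

    motor_direction is 'c' (clockwise) for biodegradable waste, otherwise 'a'.
    bin_full is True if any ultrasonic reading is below the threshold.
    """
    direction = 'c' if label in BIODEGRADABLE else 'a'
    bin_full = any(d < FULL_THRESHOLD_CM for d in distances_cm)
    return direction, bin_full


print(decide("banana", [25.0, 30.2]))  # ('c', False)
print(decide("bottle", [8.4, 30.2]))   # ('a', True)
```

Adding another biodegradable class then only means extending the `BIODEGRADABLE` set, without touching the GPIO code.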

```python
import tarfile  # used instead of the IPython "!tar" command, so this also runs as a plain script

modelFile = "models/ssd_mobilenet_v2_coco_2018_03_29/frozen_inference_graph.pb"
configFile = "models/ssd_mobilenet_v2_coco_2018_03_29.pbtxt"
classFile = "coco_class_labels.txt"

# Download and unpack the TensorFlow model once, before the loop starts.
# The .pbtxt config and the class-label file are not part of this archive
# and must already be present at the paths above.
if not os.path.isdir("models"):
    os.mkdir("models")
if not os.path.isfile(modelFile):
    archive = "models/ssd_mobilenet_v2_coco_2018_03_29.tar.gz"
    urllib.request.urlretrieve('http://download.tensorflow.org/models/object_detection/ssd_mobilenet_v2_coco_2018_03_29.tar.gz', archive)
    with tarfile.open(archive) as tar:
        tar.extractall("models")
    os.remove(archive)  # delete the .tar.gz file

with open(classFile) as fp:
    labels = fp.read().split("\n")

# Read the TensorFlow network
net = cv2.dnn.readNetFromTensorflow(modelFile, configFile)

# Text settings used by display_text()
FONTFACE = cv2.FONT_HERSHEY_SIMPLEX
FONT_SCALE = 0.7
THICKNESS = 1

camera.resolution = (1280, 720)
camera.rotation = 180
filename = "/home/pi/Object_Detection/images/img100.jpg"  # any writable location (the folder must exist)

try:
    while True:
        time.sleep(0.9)
        if gpio.input(p):               # push button pressed
            gpio.output(out, True)      # green LED on while capturing
            time.sleep(2)               # short pause before the capture
            camera.capture(filename)
            print("Done")
            gpio.output(out, False)

            im = cv2.imread(filename)
            objects = detect_objects(net, im)
            string_obj = ""             # reset: a frame with no detection is handled as non-biodegradable
            display_objects(im, objects, 0.59)
            print("detected object is a", string_obj)

            if string_obj == 'apple' or string_obj == 'banana':
                motor_rotation('c')     # biodegradable: clockwise
            else:
                motor_rotation('a')     # non-biodegradable: anti-clockwise

            dist1, dist2 = distance()
            print("Measured Distances = {:.1f},{:.1f} cm".format(dist1, dist2))
            # Red LED on if either sensor reads less than 10 cm (bin full)
            gpio.output(arduino, dist1 < 10 or dist2 < 10)
except KeyboardInterrupt:
    pass                                # Ctrl+C ends the program
finally:
    gpio.cleanup()                      # release the GPIO pins however the loop ends
```

Images of the Final Product:

Images of the Final Output:

Video

Conclusion:

The following conclusions can be drawn from this project:

1. The smart segregator dustbin built in this project is too expensive to operate on a commercial scale; it is intended only as a prototype or proof of concept.

2. Using image-based object detection, it can efficiently segregate biodegradable and non-biodegradable waste.